Retro Diffusion Extension for Aseprite

A downloadable tool for Windows, macOS, and Linux

Rapid designing with AI

Create, change, and refine artwork in seconds.

What is Retro Diffusion?

This extension for the popular pixel art software Aseprite lets you generate pixel art with AI from inside Aseprite. It also adds advanced features like smart color reduction and text-guided palette creation. Combined with the state-of-the-art pixel model, you can design incredible pixel art pieces in record time.

The website (retrodiffusion.ai) and extension are different tools, and use different models!

DO NOT buy the extension if you are trying to get the same results as the website, it is not possible!

!!! BEFORE PURCHASING ENSURE YOU READ THE COMPATIBILITY SECTION BELOW !!!

Feature chart

This chart outlines the differences between the full version ($65) and the "Lite" version ($20). Both versions are excellent for pixel art image generation, and come with advanced tools for cleaning up and editing pixel art.

One-time payment

No subscriptions and no credits required, just a flat upfront price. Don't worry about adding another monthly charge to the already way too long list.

Pay once, and get updates and support for no additional cost. How products should be.

Custom pixel art AI model

Retro Diffusion comes with its own pixel art model, which returns results astronomically better than any competing model or AI. If you have tried to get DALL-E 2, Stable Diffusion, or even Midjourney to create accurate pixel art before, you know that they just don't get it.

The best part is, this model has been trained on licensed assets from Astropulse and other pixel artists with their consent.

Select the pixel art model and watch as near perfect pixel art is made in seconds!

This does NOT contain the models on the website! Image generations made locally will not be the same as online!

Check out my site for more images! https://astropulse.co/#retrodiffusiongallery

Consistency at any size

No matter what size or aspect ratio you're generating at, get consistent and creative results!

Convert any image to pixel art

Using the "Neural Pixelate" tool, easily turn images into pixel art versions with style accurate colors, or choose to keep the original colors!

Transform your art

Traditionally, resizing pixel art doesn't produce good results, as it either destroys detail when downscaling or makes the image blurry or blocky when upscaling. "Neural Resize" allows you to change the size of a pixel art image and generate entirely new details.

You can also use "Neural Detail" to add higher levels of detail to simple pixel art designs.

Control Generations

Using a unique pipeline, Retro Diffusion allows you to control lighting, colors, and other image features from an ultra-simple interface.

These settings are applied in-generation, not as post processing effects. This allows the images to change and adapt to the color and lighting conditions.

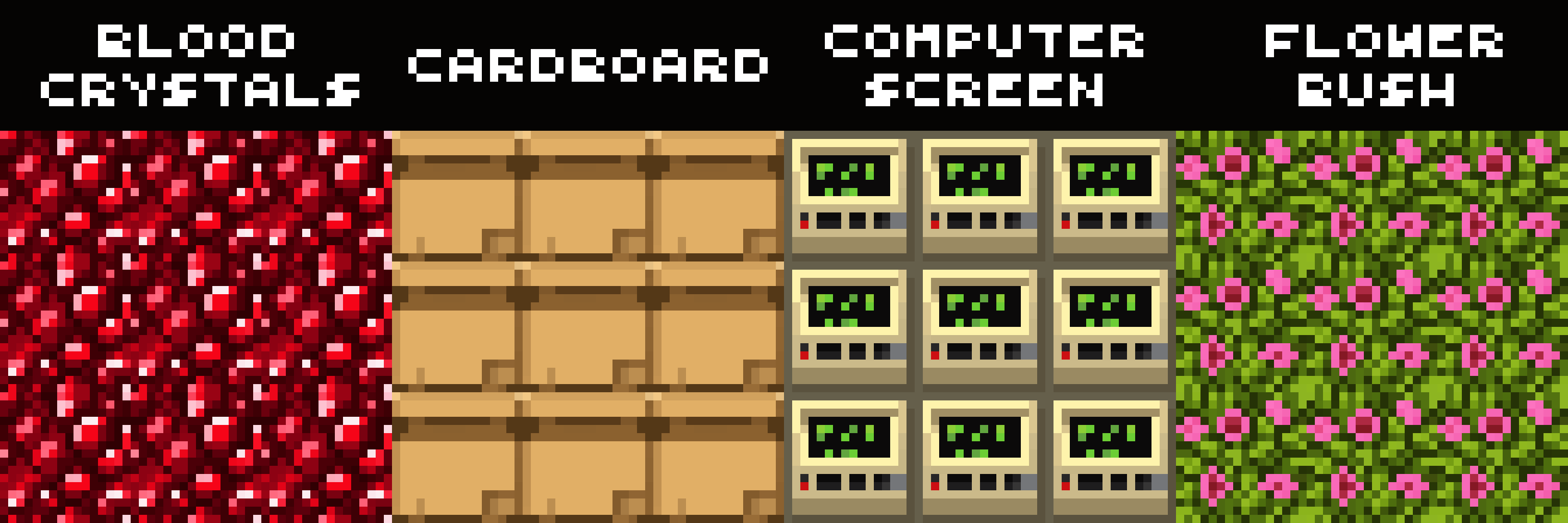

Minecraft assets

Several models have been developed specifically for Minecraft style assets, making developing good looking mods and resource packs easier than ever!

All these assets took under an hour to create, with most of the time spent generating images.

Additionally, if you enable the "Tiling" modifier, you can create beautiful seamless block textures in record time.

Generate texture maps

You can automatically generate material texture maps for tiles and import them into your game engine to give assets depth and texture properties:

Game items

Generate creative and interesting game assets just by describing them, no more need for dreaded 'programmer art.'

Palettize

Using the Palettize feature, you can easily reduce the number of colors in an image, or change the colors entirely in just a couple clicks.

Color Style Transfer

Convert images to an alternative color palette while maintaining the same style.

Built-in styles

Retro Diffusion has over a dozen different pixel art styles available at the click of a button. In addition to the "Game item" and "Tiling" modifiers there are:

These modifiers can even be applied at different strengths, or mixed together to achieve different styles!

Pixel Art Background Removal

Quickly and easily remove backgrounds in one click.

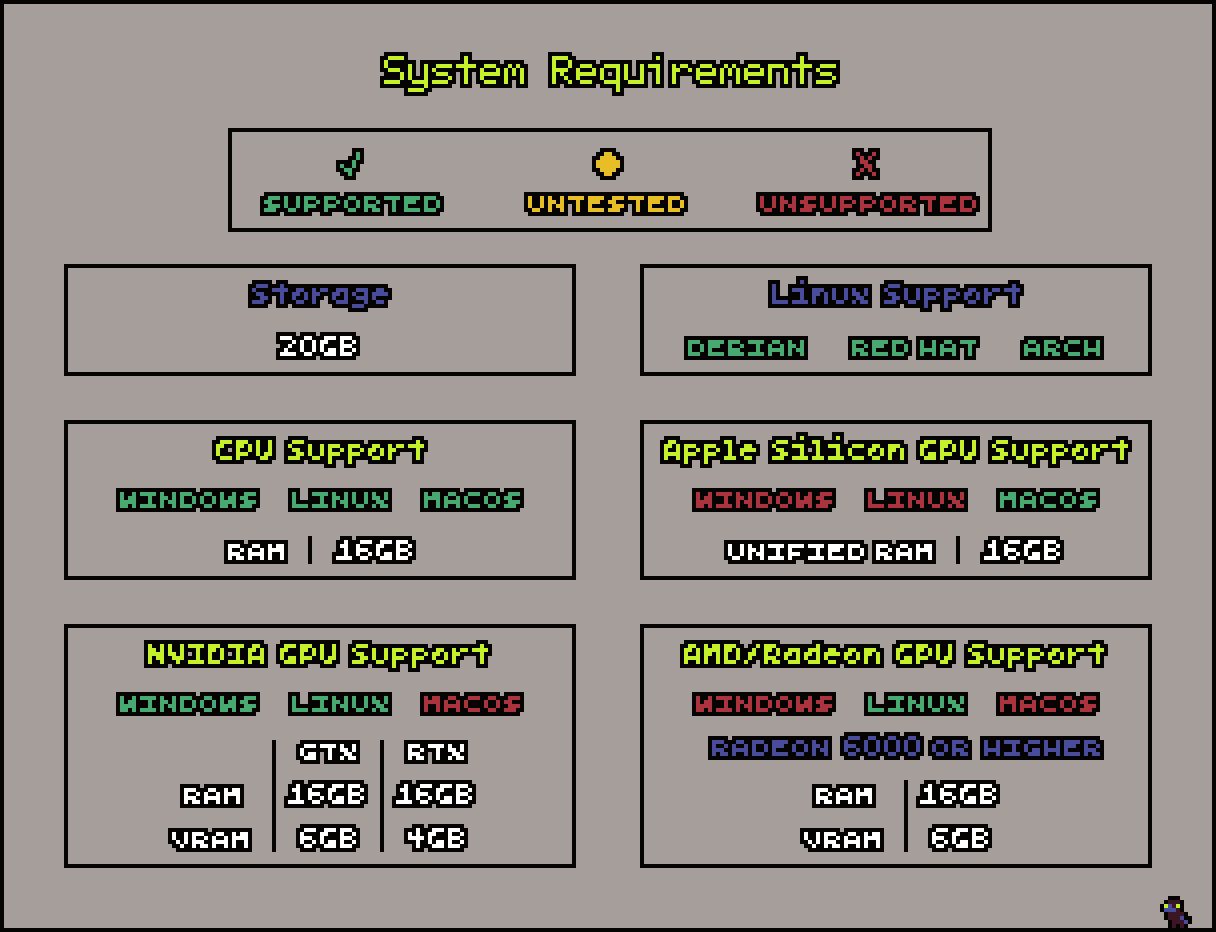

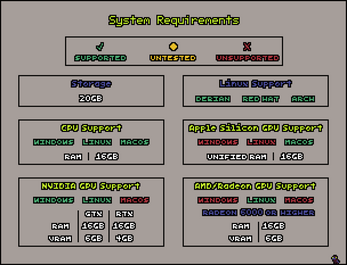

Compatibility

Ensure you have the latest version of Aseprite.

On setup, please read through the installation instructions thoroughly and make sure you are connected to a stable Wi-Fi network (mobile hotspots will not work).

Refer to the chart below for exact compatibility information:

Learn more about your hardware and if you meet the requirements here:

System Compatibility

Don't meet the hardware requirements? Use the website and don't worry about putting strain on your own computer!

https://www.retrodiffusion.ai/

* Linux support is not guaranteed. The number of distros and environments, and how commonly users modify their systems, make guaranteed support next to impossible. Retro Diffusion has been tested on stock Ubuntu, Mint, and Fedora. Customized versions of these distros may not be supported. If you have any issues with compatibility on Linux, be sure to contact me directly via Discord.

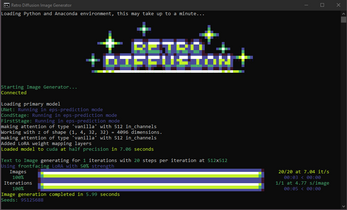

NOTE: The first generation will take a while, as it may need to install additional models.

Performance statistics

GPU:

Nvidia GTX 1050 Ti: 64x64 at quality 5 in 2.5 minutes.

Nvidia GTX 960: 64x64 at quality 5 in 2 minutes.

Nvidia GTX 1660 Super: 64x64 at quality 5 in 2 minutes.

Nvidia RTX 3060: 64x64 at quality 5 in 5 seconds.

Nvidia RTX 3090: 64x64 at quality 5 in <2 seconds.

Radeon RX 6650 XT: 64x64 at quality 5 in 20 seconds.

Mac M1 Pro 64 GB: 64x64 at quality 5 in 26 seconds.

Mac M2 Air 16 GB: 64x64 at quality 5 in 50 seconds.

CPU:

Intel i5-8300H: 64x64 at quality 5 in 10 minutes.

Ryzen 2600X: 64x64 at quality 5 in 10 minutes.

Intel i7-1065G7: 64x64 at quality 5 in 5 minutes.

Ryzen 5800X: 64x64 at quality 5 in <4 minutes.

Aseprite not for you?

Check out the image generation website!

Future versions

Any future versions or patches of Retro Diffusion will be given to previous buyers at no additional cost. Make sure to check your email for new versions!

The current version is 14.0.0.

Previews and updates

The best place to go for any information on the current state of Retro Diffusion, or previews of upcoming content is my Twitter profile: https://twitter.com/RealAstropulse

Contact information

The best place to reach me is by joining the Retro Diffusion Discord server: https://discord.gg/retrodiffusion

Alternatively, use the contact form on my website: https://astropulse.co/#contactme

The website (retrodiffusion.ai) and extension are different tools, and use different models!

DO NOT buy the extension if you are trying to get the same results as the website, it is not possible!

| Field | Value |
| --- | --- |
| Updated | 23 days ago |
| Status | In development |
| Category | Tool |
| Platforms | Windows, macOS, Linux |
| Author | Astropulse |
| Tags | ai, Aseprite, extension, Pixel Art, plugin, stable-diffusion |

Purchase

In order to download this tool you must purchase it at or above the minimum price of $65 USD. You will get access to the following files:

Download demo

Development log

- Retro Diffusion Update: QoL and Palettes (Mar 10, 2025)

- Retro Diffusion Update for January: Colors & Poses (Jan 28, 2025)

- Retro Diffusion Update for July: Texture Maps & Modifiers (Jul 30, 2024)

- Retro Diffusion Update for June: Palette Control & QoL (Jun 24, 2024)

- Retro Diffusion Update for May: Prompt Guidance & Generation Size! (May 19, 2024)

- Retro Diffusion Update for April: ControlNet Expanded! (Apr 30, 2024)

- Retro Diffusion Update: ControlNet-Powered Tools! (Mar 03, 2024)

- Retro Diffusion January Update: NEW Composition Editing Menu! (Jan 20, 2024)

Comments

Hello, I have a question: are the images generated by the model permitted for commercial use? Also, how are the rights to the resulting image handled?

Yes, you own the images generated by it.

Hey there, I'm using this extension on a Mac mini, and I'm receiving the following error when I try to generate anything:

[[[[[

Importing libraries. This may take one or more minutes.

/Users/xxx/Documents/Aseprite_Retro_Diffusion/venv/lib/python3.11/site-packages/lightning_fabric/__init__.py:29: UserWarning: pkg_resources is deprecated as an API. See https://setuptools.pypa.io/en/latest/pkg_resources.html. The pkg_resources package is slated for removal as early as 2025-11-30. Refrain from using this package or pin to Setuptools<81.

__import__("pkg_resources").declare_namespace(__name__)

W0114 17:30:59.342000 49613 torch/distributed/elastic/multiprocessing/redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

ERROR:

Traceback (most recent call last):

File "/Users/xxx/Library/Application Support/Aseprite/extensions/RetroDiffusion/stable-diffusion-aseprite/scripts/image_server.py", line 47, in <module>

import hitherdither

ModuleNotFoundError: No module named 'hitherdither'

Catastrophic failure, send this error to the developer.

]]]]]

Hey! This indicates there was an issue with the installation.

Can you tell me if you are using an apple silicon mac or an intel one?

Hi, I have a Mac mini with Apple M4 Pro

Great. Try removing all of the files associated with the extension, then installing again. Make sure you are using Python 3.11.6, not a later version.

Hello, this is a really nice product. Even though I value text-to-image because it's convenient, I find myself needing image-to-image-type generation for a precise workflow. This is because I would much rather generate conceptual art in other image generators before converting the "right one" into pixel art. For this the variety of methods you provide are much appreciated ("image-to-image", pixelate, resize, etc). But in this scenario I feel like there is a bottleneck in the recognition step. Frequently the image generation fails to follow precise prompt details inside the "description" field or fails to recognize certain characteristics of the provided image. I was wondering if there could be a way of outsourcing only this step to a model that's more powerful. Maybe through an API key? Thanks.

I was wondering what the difference is between the Aseprite plugin and the website version. Also, I would love to see better results for Minecraft textures (items/tools/weapons and armor). The website version requires a lot of attempts to make a good weapon (specifically at 16x16 and 32x32; 128 and above are usually very solid). Thanks! Keep up the good work!

The extension and site are pretty much completely different. We're working on a couple updates to the mc items for lower resolutions, hopefully should be out soon.

Ah man, I just saw that AMD GPU won't work on windows after purchasing, so it's generating using my CPU. Is there any function that doesn't work without a GPU or all works except it takes more time? And do you plan to add support for AMD GPU with windows in the future? I have a RX 7900 XTX

It all works on CPU, just slowly. I do plan to add support for AMD on Windows, now that PyTorch supports it. I still need to update many other components and wait for the AMD PyTorch Windows branch to be a bit more stable, so there is no ETA on when it will be ready.

Looking forward to it! Thanks for the quick response. Additionally, do you think it is possible to add a function to create in-between frames? Not animation from scratch, but adding frames to existing animations based on the previous and next frame.

No, in-betweens are actually more complex than just regular animation. It's something I'm working on, but it will only be available on the site due to the massive size of the models involved.

do you have a video tutorial on how to buy and install?

in the future can you update more languages for users with poor English, if so please add Vietnamese

can i draw it at around 720*1024? and is 3060 12gb ok to draw at that high resolution? and is rtx 2080 ok?

No, 720x1024 is much too high a resolution for pixel art. You can create smaller pixel art and upscale it. The 3060 12 GB and the 2080 are both suitable cards.

Does it support NSFW?

It is not trained for NSFW, but since it's running on your own machine it will not specifically block it.

thank you, i will look into it

Some Illustrious models are pretty good at pixel art. I know SDXL wasn't a big enough upgrade from SD 1.5, but since Flux is really never going to be optimized enough for consumer hardware, maybe this could be the next step? I know Illustrious is still SDXL, but it is giving decent results.

Do you think this could be used to accurately make isometric art? (Such as furniture for a diorama style room).

Can you add paypal on your website? I can't pay by card or Link

Unfortunately not at the moment. Paypal is extremely difficult to work with so we haven't been able to add them yet.

Hi, this looks interesting, but is there a feature to clean up artifacts like doubled pixels, broken lines, jaggies etc.? I often find myself needing to clean up the results of warping or rotating a sprite with Nearest Neighbor.

On top of that, if the API has said capability, I'm wondering if it'd have any limitation with applying a potential clean up process not just to easily recognizable things (people, animals, items etc.) but rather to individual parts that are not readily identifiable, such as character limbs. I've attached a sample sprite of a limb which shows what I'm talking about, with warping applied to a starting sprite piece, then cleaned up manually:

Thanks.

It does have a filter for cleaning artifacts, but it is not like doing it by hand. Repairs like you describe require far too much "intelligence" from the AI models. The filter it has helps with removing orphans, cleaning lines, and repairing some colors. You can find it in "Sprite" -> "PixFix Filter"

Also check out this extension; it makes rotations far cleaner than NN rotations. https://astropulse.itch.io/clean-rotate-for-aseprite

Thanks for the reply. I see.

I have another question or two that might be better to put in their own threads, which I’ll do later on.

Thanks for pointing to that rotation tool, I’ll check it out.

In any case, I’ll be following the development of this API.

Feel free to drop by the discord and ask any questions there, much easier to respond and keep track of things. https://discord.gg/retrodiffusion

does this support animations?

It does, but only via the website API, which requires credits to use. You can learn more about that here: https://www.retrodiffusion.ai/

Rage Bait

No you

I'm checking in about Retro Diffusion's hardware requirements. I saw in the docs that Radeon graphics cards aren't supported on Windows.

I'm wondering if it's a different story if I'm running Radeon within a Linux setup through Windows Subsystem for Linux (WSL). Would Retro Diffusion work then?

Just curious if that's a viable option. Thanks for your time!

This is not a viable option due to how the program needs to be run on the main system.

Should run well on M1 MacBook Air 8gb?

No, that is below the minimum requirement. See the compatibility graphic on the page.

is this a one time payment or is it credit base

I know it says one-time payment, so I purchased it, but I had to make an account online and use credits from the webpage to generate anything in the Aseprite extension.

The extension is a one-time purchase and it runs locally. All of the tools for that can be found in the Sprite menu, or in Help, Retro Diffusion Scripts.

It sounds like you may have used the "Retrodiffusion.ai" tab by mistake. That section also lets you use the website, which requires credits. This is why its tools are labeled "API Text to Image": they use the website API. It is only an option, not something you need in order to generate.

You can use all other tools in the extension without signing into the site, buying credits, or even having an internet connection.

Is locally deployed software the same as cloud based websites in terms of functionality?

No, they do not use the same models or the same code base even. Completely different products. The website runs models that are extremely large and can only run on server grade gpu clusters.

I had a few questions about this one. I already saw it coming last year, but I didn't look into it much anymore since I was learning to do AI on my own with image and video generation and so on.

I do have Aseprite and I was wondering whether to buy this pack with the extension: $50 for just the model vs. $65 with the Aseprite extension. I use Photoshop for my artwork more than Aseprite, but it might be nice. Is it possible to add it later on?

But I do read that the model on the website is different ( I presume just with features and animations not the art itself in the model or do you provide also those models updated with animation walk? or just using control net part on the website feature for the animation so it's all the same animation with the pose ?)

Or are you possibly using LoRAs as well, and do you include them in the package?

I rather do my own generation and I can make more and as much as I want ( costing credits on the website )

If I just use my own stable diffusion or auto1111 or comfyUI whatever then I can just use this model along with control net and make my own animations since it seems you are doing the same on your website?

I really like the animation that I have seen, but it was released not long ago, and I was also hoping for more animations like death, climbing, dashing, dodging, and attacks (I did read something about you working on it?). I presume I could achieve that by using ControlNet and your model in my own Stable Diffusion setup?

I found something named "voidless.dev" in a YT short yesterday showing vector artwork but doing animations with just a prompt. It seems nice, and maybe it could do pixel artwork as well, but I still need to test it out.

Or do you think you might update the Aseprite extension in the future with animations, or would that only be part of your website, so people need to figure out themselves how it works with ControlNet? (I know how ControlNet and such works.)

I also read somewhere that animations were "max" 48x48 in size on your website? I could get bigger ones when I try it on my own, I guess.

Thanks for the reply so far.

The animations are not done using ControlNet; they are made using their own specific model. This is also why other actions haven't been added yet: we don't have the data to train a model for them.

If you are looking for results similar to the site, they can't be achieved locally. The extension does give you more control, has ControlNet integration, lets you load your own LoRAs, and so on. But it is not the same as the site, and it likely will never have the same full capabilities.

Hey this is really cool!

Can it do sprite sheets for animation?

Hey! The extension cannot, but the website can https://www.retrodiffusion.ai/

Hey there! From some of the older comments I saw that animations / sprite sheets weren't supported yet. Did I understand correctly that it's now working only on the website version? If so are there plans to support this also for the extension? Because I was thinking of getting this extension since I'd love to generate the images locally!

Hey! Animated sprites are available in the extension, but only via the API (they still cost credits like the site).

The model that enables them cannot be hosted locally due to both size constraints (it is absolutely massive, beyond what is even reasonable for a 5090 to run) and licensing issues, as we cannot distribute the model for local use.

The models hosted on the site will not be added to the extension, for the above reasons.

Ahh alright! Thanks for the clarification!

I purchased the product on Gumroad, how can I migrate to here?

No, but you will be able to move it to https://www.retrodiffusion.ai/ once we have the store set up there.

I am the same. Are the contents of the two stores the same now? If it is different, when can I migrate?:)

Store is still not set up, you'll get an email when it is.

Is there an expected time that will be completed next quarter or this year?

Hello, I have a question: does the complete version also include the Retro Diffusion model? On Gumroad they are two different things: the Retro Diffusion extension for Aseprite and the Retro Diffusion model.

Hey! Yes it does, you can access them from "Help" -> "Retro Diffusion Tools" -> "Download Models" inside the extension.

Hi, me and my brother who both work full time jobs and don't have much spare money, really want to get into indie game development. However, the biggest issue for us so far was sprites and sprite animations creation which takes forever with full time jobs and families, and since we are in 2025 I figured that AI should already handle this, especially considering that 2d pixel art should be easy, but I still did not see any realistically working models or services, they all do weird stuff and absolutely ignore half of what I mention. I am wondering if your plugin (full version) can accurately generate animation sprite sheets from a static sprite? I was satisfied with the result of chatGPT 4o generating me what I asked for from a hand sketch (attached). However, it could not properly (maintain scale, flip everything logically) do basic manipulations such as generating the left-facing model (thus flipping the arms, with the drill arm being closer to the viewer). Can your plugin properly do that, and then generate animation frames sprite sheet for movement, drilling and etc?

Contrary to how it seems, pixel art is one of the most difficult things for AI to do accurately, because it requires way more precision and accuracy than almost any other art form.

The plugin can't create sprite sheets like this, and certainly not without a lot of leg work. You're probably better off looking into converting 3d animations to 2d sprites, that takes a lot less effort.

Hello there. Fantastic work with this!

I'm planning a photoshoot where a person will be modelling for a sprite sheet. Once I've compiled that into a sprite sheet, could I run that through this? Or would I need to run each image through the model?

For the whole sprite sheet, if that works, could I also change things like a sword into a mace? Or a handgun into a steampunk rifle for instance?

Thank you :)

Hey! The models in this tool aren't quite capable enough for animation like that, and especially for changing a specific item while leaving other elements intact.

You're welcome to try of course, but it will be an up-hill battle :)

Error when trying to generate something:

Importing libraries. This may take one or more minutes.

ERROR:

Traceback (most recent call last):

File "C:[blablablamyfolder]\Aseprite\extensions\RetroDiffusion\stable-diffusion-aseprite\scripts\image_server.py", line 6, in <module>

import torch

ModuleNotFoundError: No module named 'torch'

Catastrophic failure, send this error to the developer.

Hey, you need to run the setup script from "File" -> "Setup Retro Diffusion".

If there are errors, it did not work. For further troubleshooting join the discord server: https://discord.gg/retrodiffusion

Hi! When I try to setup the script, it gives me this error:

ERROR:

Traceback (most recent call last):

File "C:\blablablafolder\Aseprite\extensions\RetroDiffusion\python\setup.py", line 667, in <module>

import torch

ModuleNotFoundError: No module named 'torch'

Install has failed, ensure requirements are installed.

If issues persist, please contact the developer.

Joined the discord and found the solution on the forums, so here it is if anyone is having the same problem:

- Uninstall 32-bit Python (keep only the 64-bit version)

- In Aseprite, go to Help->Retro Diffusion Tools->Open python Venv folder

- Delete all the contents of the opened folder

- In Aseprite, run File->Setup Retro Diffusion again
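
The first step in the list above depends on knowing which Python build is actually being picked up. A minimal sketch of that check, assuming the 64-bit fix reported here and the Python 3.11 version mentioned in an earlier reply on this page; `check_interpreter` and `RECOMMENDED_VERSION` are hypothetical names for illustration, not part of the extension:

```python
import struct
import sys

# Assumption: 3.11 is taken from a developer reply on this page
# ("python 3.11.6, not a later version"), not from an official spec.
RECOMMENDED_VERSION = (3, 11)


def check_interpreter(version, pointer_bits):
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    if pointer_bits != 64:
        problems.append(f"Python is {pointer_bits}-bit; keep only a 64-bit build")
    if tuple(version[:2]) != RECOMMENDED_VERSION:
        found = ".".join(str(part) for part in version[:2])
        problems.append(f"Python {found} found; 3.11 is the version mentioned here")
    return problems


if __name__ == "__main__":
    # The size of a C pointer tells us whether this build is 32- or 64-bit.
    bits = struct.calcsize("P") * 8
    print("Interpreter:", sys.executable)
    for line in check_interpreter(sys.version_info, bits) or ["No problems found"]:
        print(line)
```

Run it with the same `python` command the setup would use; if it reports a 32-bit build, that matches the failure described in this thread.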

Is this version updated like the website or is it very old?

It is updated frequently, about every 1-2 months. But it is not the same as the website, it uses completely different models. The models on the website can't run on normal gpus, they are far too large.

Hello, how are you? It's been more than 3 or 4 months and there's been no update!

Haven't had any updates to push. Right now I'm working on AMD support for windows.

Hello! I'm trying to make very simple pixel art characters like the ones found here:

https://www.epicmafia.com/roles

Is there any way to provide those sprites as a guide and make similar ones, with different roles?

This style will be difficult to get without training for it. I recommend checking this guide out, it tells you how to train your own "lora" model that you can use with retro diffusion to get the exact style you want. https://docs.google.com/document/d/1jBjn7xfGzGmRpvap43hMvbNq0DLCDJWa30JG-1Esx3o/...

Hi,

I'm considering buying this. Can I expect this to work tolerably well (and without any library issues obviously) on a 2024 M3 Macbook Air?

Unfortunately no, that model does not have enough memory to run this program well.

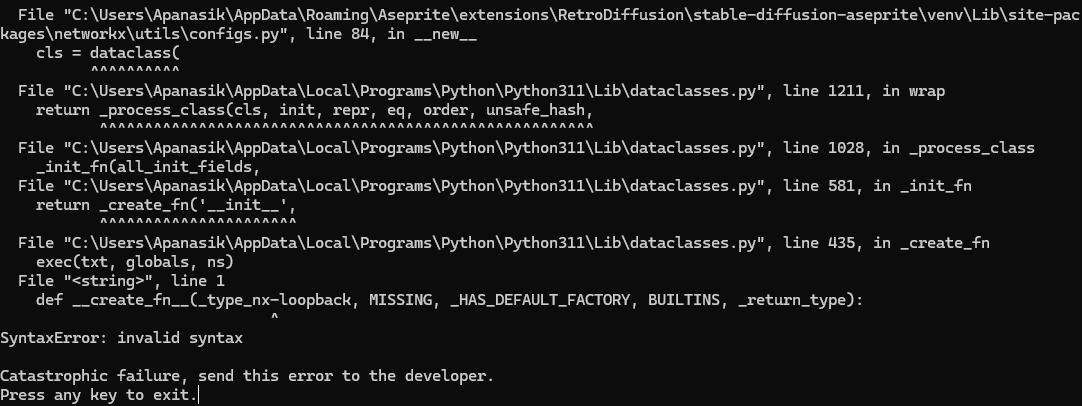

I've installed last torch: https://download.pytorch.org/whl/nightly/cu128

But still got error:

Any help?

Retro Diffusion uses its own virtual environment, so you need to do all of the Python library management there. You can find the venv location in "Help" -> "Retro Diffusion Tools" -> "Open Python Venv Folder".
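
Because packages installed into the system Python are invisible to a separate virtual environment, it can help to confirm which interpreter is running and what it can see. A rough sketch using only the standard library; it assumes you run it with the Python executable inside the venv folder, and the helper names `in_virtualenv` and `missing_modules` are made up for illustration:

```python
import importlib.util
import sys


def in_virtualenv():
    """True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix


def missing_modules(names):
    """Return the top-level module names this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Interpreter:", sys.executable)
    print("Inside a venv:", in_virtualenv())
    # 'torch' and 'hitherdither' are the modules named in tracebacks on this page.
    print("Missing:", missing_modules(["torch", "hitherdither"]))
```

If the interpreter path printed is not inside the venv folder, any `pip install` done with that interpreter will not affect the extension.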

Thanks for the reply! I've tried to manually update all torch packages in the local directory, but it looks like there is some incompatibility with them.

Ah, that's unfortunate. We'll be supporting 50xx cards officially once PyTorch has stable support, but until then you'll need to hold tight :)

I purchased this tool. Can you please add a license to the itch.io page stating that it can be used for commercial content production? This is important.

Hey! The license is included inside the extension files itself, but I've now also added it as a "demo" here.

It's a bunch of legal jargon, but it essentially boils down to this: the code and models are owned by Astropulse LLC and cannot be used commercially, but the outputs of the code and models are owned by whoever creates them (you) and can be used commercially, since you have the rights.

Thanks. This is helpful.

hi! 👋 are there any upcoming sales?

Reading below, it appears this cannot be used to make sprite sheets. Is there ever going to be that capability, or should I just git gud?

Right now I'm doing everything my little programmer fingy's allow me to, cutting up sprites into pieces and made a script to re-composite them in engine, this way I can have 1 head and a ton of bodies/hair/etc.

But if this could one day make sprite sheets that'd save a life.

The extension probably won't be able to do sprite sheets any time soon, but on the website (https://www.retrodiffusion.ai/) we've nearly got a model released that can do walk cycles like shown here: https://x.com/RealAstropulse/status/1896924271659884854

Hi Astro, this project looks incredible! I am planning on purchasing it, but I was curious if the custom model you created would be accessible for me to run through separate programs after purchase? I use a lot of my own custom setups in ComfyUI, so I would get more out of the purchase if I could riff on it with my own variations.

Yep! You can access those models from inside the extension through "help" -> "retro diffusion tools" -> "download models"

Excellent, thank you for creating this. It's tough to find reasonably ethical models, so I really appreciate the work you're doing here.

i'm using Mac M3 MAX. My first locally generated image was okay-ish, but from second and on, all that's generated is just Color Noise. any idea?

I haven't heard of this issue before. Would you join the community Discord server so we can figure out what's going on more closely? https://discord.gg/retrodiffusion

I'm having a weird problem. When I try to run the install script, it detects my Geforce 1080 TI card as an "AMD GPU". I know cuda works as I've played around with other AI models using it. How do I fix this?

Edit: using windows 10 if that's of any relevance. I also have the latest geforce drivers installed.

Hey! There can be false detections sometimes because of how Windows sorts graphics outputs, especially if your CPU is AMD and has integrated graphics.

The comments here aren't a good place for troubleshooting, so if you'd join the community Discord server and make a post in the errors channel that would be great: https://discord.gg/retrodiffusion

Will do! Thanks for the swift response.

You would need to use Linux with an AMD gpu. Additionally only more recent AMD cards are supported, 7000 series and up, with the exception of the 6700 and 6800 which are also supported.

Hi Astropulse, i have a RX 6600. It isn't supported, then? It should have 8GB of VRAM, if i'm not wrong.

It is not supported by AMD's AI drivers in PyTorch.

can this make animations?

It cannot make animations yet, though it is something we are working on.

When you say make animations, is this more like animations within Aseprite or Sprite sheets?

You can make animations by hand in Aseprite, but Retro Diffusion can't generate animations or animated spritesheets.

I see, would it however do well enough to recreate the same say character but in another pose? Or would AI completely create something new?

It creates something new; this model doesn't have the consistency to make the same characters in different poses.

Will this have a date when it stops working?

No, it runs locally on your own hardware. It will only stop working if you delete the files or other things it relies on.

best tool on itch.io ever!